Wednesday, October 13, 2010

Reminder: Voices That Matter this weekend

I'm getting into Philadelphia on Friday. I'm driving, so I don't have an exact arrival time, but it'll probably be late afternoon. I'm giving two presentations: one on Multitasking at 3:00pm on Saturday and one on OpenGL ES 2.0 at 1:00pm on Sunday. I'm also sitting in on the "My Year of Going Indie" panel at 6:00pm on Saturday with some awesome people, including Dan Pasco of Black Pixel and Daniel Jalkut, the author of the program I'm typing this post in.

I've also heard a rumor that there is beer in Philadelphia, which I will be investigating in a professional capacity both Friday and Saturday nights. I look forward to seeing many of you there.


Saturday, October 9, 2010

Tile Cutter Open Sourced

I have open-sourced Tile Cutter under the MIT license and put it on GitHub as a public project. This application was written extremely quickly (less than half a day) because I needed the functionality for a client project, so there are several things about this application that are klugey, including some uncomfortable crossing of the boundaries between what a view should do and what a controller should do.

But, it works, and I don't see myself having time to go clean it up anytime soon, so I'm donating it to the public good, warts and all. I welcome back any enhancements, bug fixes, or modifications, but you are not required to give anything back under the license.


Friday, October 8, 2010

Cutting Large Images into Tiles for UIScrollView

If you ever need to display an obscenely large image on an iOS device using a UIScrollView, there's a session available in the WWDC 2010 videos that will show you exactly how to do it without eating up all of your available memory. The basic idea is that you have multiple versions of your image stored at different scales, and you chop each of them up into tiles and use a CATiledLayer to display them. That way, you don't have to maintain the entire obscenely large image in memory all at once and your app's scroll performance stays snappy.
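The multiple-scales idea boils down to some simple arithmetic. Here's a minimal sketch in plain C (which compiles fine in any Objective-C file) that counts how many scale levels you'd need, assuming each level is half the size of the previous one and that you stop once the whole image fits in a single tile. The halving rule and the `TileLevelCount` name are assumptions for illustration, not details taken from the WWDC session.

```objc
#include <stddef.h>

// Hypothetical helper: counts the scale levels needed when each level is
// half the size of the previous one (rounding up so no pixels are lost),
// stopping once the whole image fits inside a single tile.
// tileSize must be greater than 0.
static size_t TileLevelCount(size_t width, size_t height, size_t tileSize)
{
    size_t levels = 1; // the full-resolution level
    while (width > tileSize || height > tileSize) {
        width = (width + 1) / 2;
        height = (height + 1) / 2;
        levels++;
    }
    return levels;
}
```

With 400-pixel tiles, for example, a 1600x800 image needs three levels: 1600x800, 800x400, and 400x200.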

Of course, you have to get the images chopped up into tiles in order to use this technique.

There's a great command-line open source tool called ImageMagick that will, among other things, chop an image up into tiles that can be used for this purpose. Unfortunately, I didn't find ImageMagick very useful for really, really large images (over around 100 megs). The program would just sit and churn and fill up my hard drive with gigs of swap space without generating any tiles. It worked fine for smaller images, but I needed something that would work on big ones.

So, I went looking for an alternative tool without much luck. There are a handful of commercial tools that will do this, but the ones I found were for Windows. I know Photoshop has the ability to do this with slices, but I didn't want to go down that route for a couple of reasons, not the least of which is that I don't have a current version of Photoshop and don't want to give Adobe any money.

After some fruitless Googling, I decided to take advantage of the fact that I'm a programmer, and I rolled my own app to do this. It was nice to be back in the Cocoa APIs, but I must admit that they feel a little crufty compared to the iOS APIs. Anyway, I call the program "Tile Cutter". It's not the most original name, I admit, but it was developed as an in-house product and didn't need a witty title.

Tile Cutter is rather bare bones and was developed in about a half-day, but it seems to work well. I was able to slice up a 1.3 gig image (yes, really) into 400x400 chunks in about 15 minutes on my laptop. I would imagine I'm not the only person who needs to slice up images to use in UIScrollView, so I've decided to release Tile Cutter as a free utility (of course, with no warranties or whatnot). I will also be releasing the source code on GitHub once I've had a chance to clean up the code a little.
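Cutting a single level into fixed-size chunks like those 400x400 tiles is mostly a matter of getting the edges right. This sketch isn't Tile Cutter's actual source; `TileRect`, `TileCountAlong`, and `TileRectAt` are hypothetical names, and it assumes square tiles whose right and bottom edges are clipped to the image rather than padded.

```objc
#include <stddef.h>

// A tile's origin and size, in pixels, within the source image.
typedef struct {
    size_t x, y, width, height;
} TileRect;

// Number of tiles needed along one dimension (ceiling division).
static size_t TileCountAlong(size_t length, size_t tileSize)
{
    return (length + tileSize - 1) / tileSize;
}

// Rectangle for the tile at (row, column). Tiles on the right and bottom
// edges are clipped to the image. row and column must be inside the grid.
static TileRect TileRectAt(size_t imageWidth, size_t imageHeight,
                           size_t tileSize, size_t row, size_t column)
{
    TileRect r;
    r.x = column * tileSize;
    r.y = row * tileSize;
    r.width = (r.x + tileSize <= imageWidth) ? tileSize : imageWidth - r.x;
    r.height = (r.y + tileSize <= imageHeight) ? tileSize : imageHeight - r.y;
    return r;
}
```

Clipping keeps every tile's pixels inside the image; padding the last row and column out to full size is an equally reasonable choice if your drawing code expects uniform tiles.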

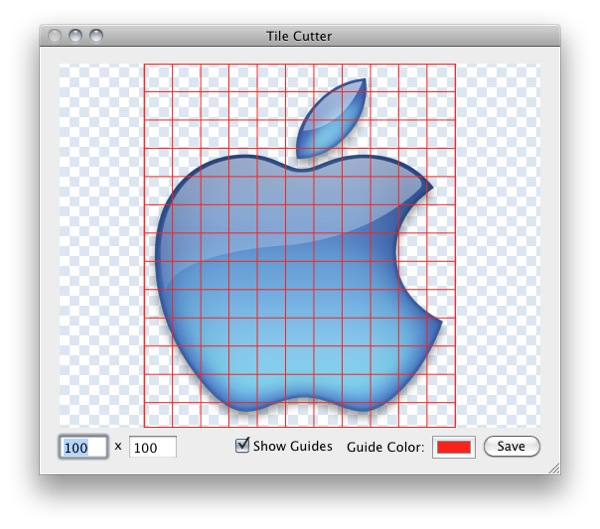

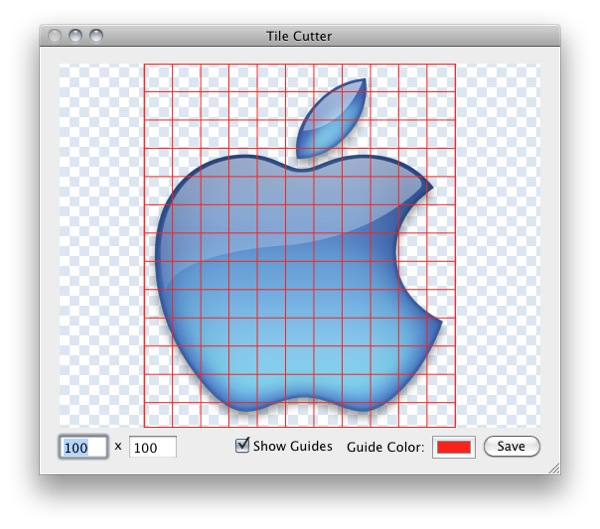

Here's a screenshot of the main interface:

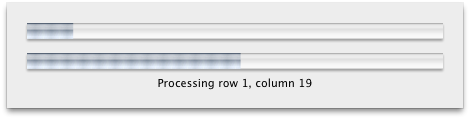

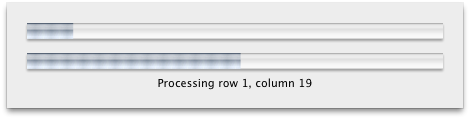

And the progress bars that display in a sheet when Tile Cutter's churning away. You'll actually only see the progress bars on very large images, however, since it operates quite quickly on more reasonably sized images.

Download Tile Cutter 1.0. Feedback, as always, is welcome.


Tuesday, September 28, 2010

Experts == Idiots?

It's sad, really, the state of technology journalism. But you knew that already. I should know enough to ignore link-baiting, crappy journalism, especially articles that do little more than republish some company's press release. Most days, I do.

Not today, though.

Computerworld posted an article with this headline today: Devs bet big on Android over Apple's iOS.

Wow, really? That seems surprising. What could possibly justify such a claim? Some proof that developers are leaving iOS in droves? Some new data showing that the Android Marketplace is actually making decent money for a substantial portion of Android developers? No, though it is encouraging to see that the Marketplace opened up to 13 new countries today. Still another 60 or so to go, but it's a step in the right direction. But that fact's not even mentioned in this article.

So what justifies such a grandiose claim? A survey of Appcelerator Titanium developers commissioned by Appcelerator. Now, if you don't know, Appcelerator Titanium is a cross-platform framework that allows you to develop an app once and generate applications for multiple platforms: iOS, Android, Linux, Mac OS, and Windows.

Leaving aside my personal opinions about cross-platform tools, the article has already strayed from the headline. Devs? Well, sure, they're devs, but they're not representative of devs in general as the headline would imply. They're developers who have specifically chosen one option, and it's an option that doesn't tie them to a platform at all. We're talking about a group of people that have already shown their willingness to hedge their bets and who aren't about to take the risk of hitching their wagon to a single platform.

It's an inherently skewed sample. If you ran that same survey past 15,000 dedicated iOS developers, or dedicated Android or Blackberry or .Net developers, you'd get drastically different results.

In other words, this survey has no value whatsoever except to Appcelerator. To them, it's perhaps useful for helping them decide where to devote their resources in the future to keep their clients happy.

But for the world at large? Worthless.

Oh, and what constitutes "betting big" in the context of the article? Well, it's not actually addressed, but their idea of "betting big" doesn't seem to match mine. There's nothing about investments, or exclusive agreements, or anything else that involves any sort of risk whatsoever. Developers were just asked things like "how interested are you in a platform X". The "betting" didn't involve monetary investments, or time investments. These developers did nothing more than state an opinion. An anonymous opinion. Oh… those crazy rebels.

Well, I'm "betting big" that the author of this post is a hack and his soi-disant "expert" is fucking clueless.

Let's face it, nobody knows for sure where the mobile market is headed, and being a Titanium Dev doesn't make your opinion any more informed or valuable than anyone else's. Personally, I suspect Android will continue to grow in market share unless Windows Phone 7 is better than great or else Microsoft manages to one-up Google's relationship with Verizon. But, any way you cut it, this is not a replay of the PC battles. There are too many companies still in the game and who have the potential to grow market share, profit share, or whatever other metric by which you want to judge.

Despite what you may read, there is no clear market leader. Nokia is still in the lead based on total handsets, Blackberry is still in the lead based on smartphone handsets sold, Apple is kicking ass in the profits department, and Android is doing gangbusters in new unit sales, if you lump all the 100+ models of Android phones together and count free phones as sales. Plus, the market is still growing. Apple, for example, didn't increase their market share at all year over year, but they increased their units sold considerably.

Understandably, many people are counting Microsoft out of the mobile game, but I'm not. Though I'm no fan of their technology stack or their approach to business, I think the sheer size of Microsoft's advertising budget, their Enterprise-savvy sales force, and the enormous pool of existing .Net developer talent they can draw on give them an opportunity for huge inroads. They may not capitalize on it, but it's certainly there, and counting Microsoft out of the wireless market would be as foolish as counting Apple out of the game 12 years ago was. And over at HP, lots of money and time is being invested into the Pre platform, which has not been a huge commercial success, but got pretty good grades with many developers who worked with it.

The game's afoot. It's going to be fun to watch, but if you are truly a betting person, this is what you might call a high-risk scenario.


Friday, September 24, 2010

More on dealloc

I really didn't expect to kick off such a shitstorm yesterday with my dealloc post. A few people accused me of proselytizing the practice of nil'ing ivars, at least for release code. Possibly I did, but my real intention was to share the reasons why you might choose one approach over the other, not to say "you should do it this way." I mostly wrote the blog post to make sure I had my head fully wrapped around the debate because it had come up during the revising of Beginning iPhone Development.


Daniel Jalkut responded with a very lucid write-up that represents one of the common points of view on this matter. That view might best be summed up as "crash, baby, crash." If you want to find bugs, the best thing you can do is crash when they happen; that way you know there's a problem and know to look for it. This is not a new idea. I seem to remember a system extension back in the old Mac System 6 or System 7 days that would actually cause your system to crash if your app did a certain bad thing, even if that bad thing didn't cause any noticeable problems in your app. I don't honestly remember the specifics, but it was something to do with the trapping mechanism, I think (anybody know what I'm talking about??). Of course, Apple didn't ship that extension to non-developers.

What makes this a difficult argument is that Daniel is absolutely right… sometimes. There are scenarios where crashing is much better than, say, data loss or data integrity loss. General rules are always problematic, but though I see his point, I'm sticking with "not crashing on customers" being a good rule generally… except when it's not.

In the interest of full disclosure, there's actually a third dealloc pattern. It's a distant third in popularity, but it has definitely become more popular since I first heard of it. In this approach, you assign your instance variable to a stack local variable, then nil the instance variable before releasing it, like so:

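```objc
- (void)dealloc
{
    // Copy the ivar to a local, nil out the ivar, then release through
    // the local, so the ivar never points at a released object.
    id fooTemp = foo;
    foo = nil;
    [fooTemp release];

    [super dealloc];
}
```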

In the release-then-nil approach, after you release, there's a tiny window between the release and the assignment of nil where, in multithreaded code, the invalid pointer could still theoretically be used. This approach prevents that problem by nil'ing first. This point of view represents the opposite end of the spectrum from the one Daniel stated. In this approach, your goal is to code defensively and never let your app crash if you can help it, even in development. If you're a cautious programmer and believe in the Tao of Defensive Programming, then there you go - that's your approach to dealloc.

For me, personally (warning - I'm about to state an opinion) I can't justify the extra code and time of the defensive approach. It's preemptive treatment of a problem so rare that it's almost silly that this discussion is even happening. I've been writing Objective-C since 1999 and I've only once seen a scenario where the dealloc approach would've made a difference in the actual behavior of the application, and that was a scenario created for a debugging exercise in a workshop, so we're really splitting hairs here.

So, here's my final word on dealloc:

- If you already have a strong opinion on which one to use for the type of coding you do, use that one. If not…

- If you prefer that bugs always crash your app so you know about them, use the traditional release-only approach

- If you prefer not to crash if you can help it and prefer to find your bugs using other means, use the newer approach

- If you want your app to crash for you, but not your customers, use the MCRelease() macro or use #if DEBUG preprocessor directives

After all, you're the one who has to live with the consequences of your decision.

Thursday, September 23, 2010

Dealloc

Last week there was a bit of a Twitter in-fight in the iOS community over the "right" way to release your instance variables in dealloc. I think Rob actually started it, to be honest, but I probably shouldn't be bringing that up.

Basically, several developers were claiming that there's never a reason to set an instance variable to nil in dealloc, while others were arguing that you should always do so.

To me, there didn't seem to be a clear and compelling winner between the two approaches. I've used both in my career. However, since we're in the process of trying to decide which approach to use in the next edition of Beginning iPhone Development, I reached out to Apple's Developer Tools Evangelist, Michael Jurewitz, to see if there was an official or recommended approach to handling instance variables in dealloc.

Other than the fact that you should never, ever use mutators in dealloc (or init, for that matter), Apple does not have an official recommendation on the subject.

However, Michael and Matt Drance (of Bookhouse Software, and a former Evangelist himself) had discussed this issue extensively last week. They kindly shared their conclusions with me and said it was okay for me to turn it into a blog post. So, here it is. Hopefully, I've captured everything correctly.


In the first approach, your application will usually crash with an EXC_BAD_ACCESS, though you could also end up with any manner of odd behavior (which we call a "heisenbug") if the released object is deallocated and its memory is then reused for another object owned by your application. In those cases, you may get a selector not recognized exception when the message is sent to the deallocated object, or you may simply get unexpected behavior from the same method being called on the wrong object.

In the other approach, your application will quietly send a message to nil and go about its merry way, generally without a crash or any other immediately identifiable problem.
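Here's a tiny sketch of what "quietly send a message to nil" means in practice. `LengthOrZero` is a hypothetical helper; the behavior it relies on is standard Objective-C: a message sent to nil does nothing and, for an integer return type like `NSUInteger`, yields 0.

```objc
#import <Foundation/Foundation.h>

// Hypothetical helper. If s is nil, [s length] is a message to nil:
// no method runs, nothing crashes, and the result is simply 0.
static NSUInteger LengthOrZero(NSString *s)
{
    return [s length];
}
```

That's the tradeoff in a nutshell: the call can't crash, but it also can't tell you that something upstream handed it a pointer it shouldn't have.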

The former approach is actually good when you're developing, debugging, and doing unit testing, because it makes it easier to find your problematic code. On the other hand, that approach is really, really bad in a shipping application because you really don't want to crash on your users if you can avoid it.

The latter approach, conversely, can hide bugs during development, but handles those bugs more gracefully when they happen, and you're far less likely to have your application go up in a big ball of fire in front of your users.

If you want your application to crash when a released pointer is accessed during development and debugging, the solution is to use the traditional approach in your debug configuration. If you want your application to degrade gracefully for your users, the solution is to use the newer approach in your release and ad hoc configurations.

One somewhat pedantic implementation of this approach would be this:

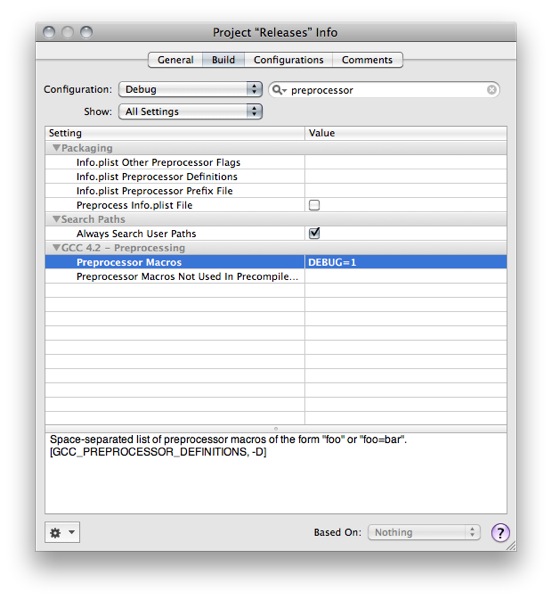

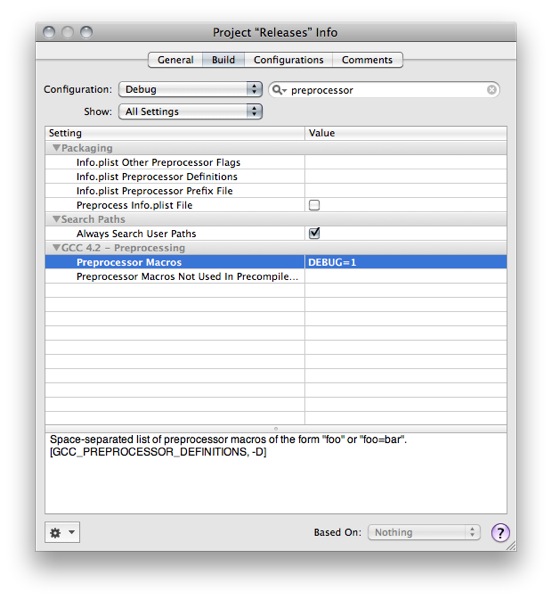

That code assumes that your debug configuration has a preprocessor definition of DEBUG, which you usually have to add to your Xcode project; most Xcode project templates do not provide it for you. There are several ways you can add it, but I typically just use the Preprocessor Macros setting in the project's Build Configuration:

Although the code above does, indeed, give us the best of both worlds - a crash during development and debugging and graceful degrading for customers - it at least doubles the amount of code we have to write in every class. We can do better than that, though. How about a little macro magic? If we add the following macro to our project's .pch file:

We can then use that macro in dealloc, and our best-of-both-worlds code becomes much shorter and more readable:
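Here's a minimal sketch of such a macro and a dealloc that uses it. It assumes manual reference counting (compile with `-fno-objc-arc`) and a `DEBUG` symbol set to 1 only in the debug configuration. The name `MCRelease` is the one mentioned in the "More on dealloc" follow-up, but the macro body here is an assumption, and the `Dwarves` class is hypothetical.

```objc
#import <Foundation/Foundation.h>

// Assumed to live in the project's prefix (.pch) header.
#if DEBUG
// Debug: release only, so a stray use of the dangling pointer tends to crash.
#define MCRelease(x) [(x) release]
#else
// Release/Ad Hoc: release, then nil, so a stray use just messages nil.
#define MCRelease(x) [(x) release], (x) = nil
#endif

// Hypothetical class used only to show the macro in dealloc.
@interface Dwarves : NSObject {
    NSString *sneezy;
    NSString *grumpy;
}
@end

@implementation Dwarves

- (void)dealloc
{
    MCRelease(sneezy);
    MCRelease(grumpy);
    [super dealloc];
}

@end
```

Because `#if` treats an undefined identifier as 0, a configuration that never defines `DEBUG` gets the release-and-nil version.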

Once you've got the macro in your project, this option is actually no more work or typing than either of the other dealloc methods.

But, you know what? If you want to keep doing it the way you've always done it, it's really fine, regardless of which way you do it. If you're consistent in your use and are aware of the tradeoffs, there's really no compelling reason to use one over the other outside of personal preference.

So, in other words, it's kind of a silly thing for us all to argue over, especially when there's already politics, religions, and sports to fill that role.

It's true that in Java and some other languages with garbage collection, nulling out a pointer that you're done with helps the garbage collector know that you're done with that object, so it's not altogether unlikely that Objective-C's garbage collector does the same thing. However, any benefit to nil'ing out instance variables once we get garbage collection in iOS would be marginal at best. I haven't been able to find anything authoritative that supports this claim, but even if it's 100% true, it seems likely that when the owning object is deallocated a few instructions later, the garbage collector will realize that the deallocated object is done with anything it was using.

If there is a benefit in this scenario, which seems unlikely, it seems like it would be such a tiny difference that it wouldn't even make sense to factor it into your decision. That being said, I have no firm evidence one way or the other on this issue and would welcome being enlightened.

After writing this, Michael Jurewitz pinged me to confirm that there's absolutely no benefit in terms of GC to releasing in dealloc, though it is otherwise a good idea to nil pointers you're done with under GC.

Thanks to @borkware and @eridius for pointing out some issues

Update: Don't miss Daniel Jalkut's excellent response.


The Two Major Approaches

Just to make sure we're all on the same page, let's look at the two approaches that made up the two different sides of the argument last week.

Just Release

The more traditional approach is to simply release your instance variables and leave them pointing to the released (and potentially deallocated) object, like so:

```objc
- (void)dealloc
{
    // Release each instance variable; the pointers are left dangling
    // until this object itself goes away.
    [sneezy release];
    [sleepy release];
    [dopey release];
    [doc release];
    [happy release];
    [bashful release];
    [grumpy release];

    [super dealloc];
}
```

In this approach, each of the pointers will be pointing to a potentially invalid object for a very short period of time (until the method returns), at which point the instance variable will disappear along with its owning object. In a single-threaded application, where the pointer would have to be accessed by something triggered by code in either this object's implementation of dealloc or in the dealloc of one of its superclasses, there's very little chance that the instance variables will be used before they go away, which is probably what has led to the proclamations made by several that there's "no value in setting instance variables to nil" in dealloc.

In a multi-threaded environment, however, there is a very real possibility that the pointer will be accessed between the time that it is deallocated and the time that its object is done being deallocated. Generally speaking, this is only going to happen if you've got a bug elsewhere in your code, but let's face it, you may very well. Anyone who codes on the assumption that all of their code is perfect is begging for a smackdown, and Xcode's just biding its time waiting for the opportunity.

Release and nil

In the last few years, another approach to dealloc has become more common. In this approach, you release each instance variable and then immediately set it to nil before releasing the next one. It's common to put the release and the assignment to nil on the same line, separated by a comma rather than on consecutive lines separated by semicolons, though that's purely stylistic and has no effect on the way the code is compiled. Here's what our previous dealloc method might look like using this approach:

- (void)dealloc
{
    [sneezy release], sneezy = nil;
    [sleepy release], sleepy = nil;
    [dopey release], dopey = nil;
    [doc release], doc = nil;
    [happy release], happy = nil;
    [bashful release], bashful = nil;
    [grumpy release], grumpy = nil;
    [super dealloc];
}
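The comma in a line like [sneezy release], sneezy = nil; is just C's comma operator: evaluate the left side, discard its result, then evaluate the right side. Since Objective-C is a strict superset of C, here's a minimal plain-C sketch of that equivalence. It rests on a few assumptions: release_buffer() is a hypothetical stand-in for -release (it simply calls free()), NULL plays the role of nil, and the two helper names are made up for illustration. It is not how the Objective-C runtime actually works.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical stand-in for -release: gives the memory back.
   free(NULL) is a harmless no-op, loosely like messaging nil. */
static void release_buffer(char *buffer) {
    free(buffer);
}

/* "Release and nil" on one line, joined by the comma operator. */
static void release_and_nil_comma(char **slot) {
    assert(slot != NULL);
    release_buffer(*slot), *slot = NULL;
}

/* Exactly the same work written as two separate statements. */
static void release_and_nil_two_statements(char **slot) {
    assert(slot != NULL);
    release_buffer(*slot);
    *slot = NULL;
}
```

Both helpers leave the pointer set to NULL, and calling either one a second time on the same pointer is harmless because free(NULL) does nothing. Which form you write is purely a matter of taste.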

In this case, if some piece of code accesses a pointer between the time that dealloc begins and the time the object is actually deallocated, it will almost certainly fail gracefully, because sending messages to nil is perfectly okay in Objective-C. However, you're doing a tiny bit of extra work by assigning nil to a bunch of pointers that are going to go away momentarily, and you're creating a little bit of extra typing for yourself in every class.

The Showdown

So, here's the real truth of the matter: the vast majority of the time, it's not going to make any noticeable difference whatsoever. If you're not accessing instance variables that have been released, there's simply not going to be any difference in behavior between the two approaches. If you are, however, then the question is: what do you want to happen when your code does that bad thing?

In the first approach, your application will usually crash with an EXC_BAD_ACCESS, though you could also end up with any manner of odd behavior (which we call a "heisenbug") if the released object is deallocated and its memory is then reused for another object owned by your application. In those cases, you may get an "unrecognized selector" exception when the message is sent to the deallocated object, or you may simply get unexpected behavior from the same method being called on the wrong object.

In the other approach, your application will quietly send a message to nil and go about its merry way, generally without a crash or any other immediately identifiable problem.

The former approach is actually good when you're developing, debugging, and doing unit testing, because it makes it easier to find your problematic code. On the other hand, that approach is really, really bad in a shipping application because you really don't want to crash on your users if you can avoid it.

The latter approach, conversely, can hide bugs during development, but handles those bugs more gracefully when they happen, and you're far less likely to have your application go up in a big ball of fire in front of your users.

The Winner?

There really isn't a clear-cut winner, which is probably why Apple doesn't have an official recommendation or stance. During their discussion, Matt and Michael came up with a "best of both worlds" solution, but it requires a fair bit of extra code over either of the common approaches.

If you want your application to crash when a released pointer is accessed during development and debugging, the solution is to use the traditional approach in your debug configuration. If you want your application to degrade gracefully for your users, the solution is to use the newer approach in your release and ad hoc configurations.

One somewhat pedantic implementation of this approach would be this:

- (void)dealloc
{
#if DEBUG
    [sneezy release];
    [sleepy release];
    [dopey release];
    [doc release];
    [happy release];
    [bashful release];
    [grumpy release];
    [super dealloc];
#else
    [sneezy release], sneezy = nil;
    [sleepy release], sleepy = nil;
    [dopey release], dopey = nil;
    [doc release], doc = nil;
    [happy release], happy = nil;
    [bashful release], bashful = nil;
    [grumpy release], grumpy = nil;
    [super dealloc];
#endif
}

That code assumes that your debug configuration has a preprocessor definition of DEBUG, which you usually have to add to your Xcode project yourself, because most Xcode project templates do not provide it for you. There are several ways you can add it, but I typically just add DEBUG to the Preprocessor Macros setting of the Debug configuration in the project's build settings.

Although the code above does, indeed, give us the best of both worlds (a crash during development and debugging, and graceful degradation for customers), it at least doubles the amount of code we have to write in every class. We can do better than that, though. How about a little macro magic? If we add the following macro to our project's .pch file:

#if DEBUG
#define MCRelease(x) [x release]
#else
#define MCRelease(x) [x release], x = nil
#endif

We can then use that macro in dealloc, and our best-of-both-worlds code becomes much shorter and more readable:

- (void)dealloc
{
    MCRelease(sneezy);
    MCRelease(sleepy);
    MCRelease(dopey);
    MCRelease(doc);
    MCRelease(happy);
    MCRelease(bashful);
    MCRelease(grumpy);
    [super dealloc];
}

Once you've got the macro in your project, this option is actually no more work or typing than either of the other dealloc methods.
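To see what the macro turns into in each configuration, here's a minimal plain-C sketch of the same switch. Everything in it is hypothetical: Object, object_new() and object_release() are a toy reference-counted type standing in for an NSObject subclass and -release, Owner and owner_release_ivars() mimic the body of a dealloc method, NULL stands in for nil, and MC_RELEASE is a C-only analog of MCRelease, not the macro itself. The sketch also treats a missing DEBUG definition as 0, which matches what #if DEBUG does when DEBUG isn't defined at all.

```c
#include <assert.h>
#include <stdlib.h>

/* #if treats an undefined DEBUG as 0; spell that out for clarity. */
#ifndef DEBUG
#define DEBUG 0
#endif

/* Hypothetical toy reference-counted object standing in for an NSObject. */
typedef struct Object {
    int retain_count;
} Object;

static Object *object_new(void) {
    Object *obj = malloc(sizeof *obj);
    if (obj != NULL) {
        obj->retain_count = 1;
    }
    return obj;
}

/* Hypothetical stand-in for -release: frees the object when its count
   reaches zero. Releasing NULL does nothing, loosely like messaging nil. */
static void object_release(Object *obj) {
    if (obj == NULL) {
        return;
    }
    assert(obj->retain_count > 0);
    if (--obj->retain_count == 0) {
        free(obj);
    }
}

#if DEBUG
/* Debug builds: release only, so a stray later use hits a dangling
   pointer and is more likely to blow up while you're testing. */
#define MC_RELEASE(x) object_release(x)
#else
/* Release and ad hoc builds: release, then nil out, so a stray later
   use sees NULL instead of freed memory. */
#define MC_RELEASE(x) (object_release(x), (x) = NULL)
#endif

/* Hypothetical owner with two "instance variables". */
typedef struct Owner {
    Object *sneezy;
    Object *sleepy;
} Owner;

/* Mimics the body of dealloc, minus the call to [super dealloc]. */
static void owner_release_ivars(Owner *self) {
    MC_RELEASE(self->sneezy);
    MC_RELEASE(self->sleepy);
}
```

In a build without DEBUG, owner_release_ivars() leaves both fields set to NULL, so running it twice is harmless. Compiled with DEBUG defined as 1, the same calls only release, leaving the fields dangling, which is the fail-early behavior you'd want during development.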

But, you know what? If you want to keep doing it the way you've always done it, it's really fine, regardless of which way you do it. If you're consistent in your use and are aware of the tradeoffs, there's really no compelling reason to use one over the other outside of personal preference.

So, in other words, it's kind of a silly thing for us all to argue over, especially when there's already politics, religions, and sports to fill that role.

The Garbage Collection Angle

There's one last point I want to address. I've heard a few times from different people that setting an instance variable to nil in dealloc acts as a hint to the garbage collector when you're using the allowed-not-required GC option (when the required option is being used, dealloc isn't even called; finalize is). If this were true, forward compatibility would be another possible argument for preferring the newer approach to dealloc over the traditional one.

It is true that in Java and some other garbage-collected languages, nulling out a pointer you're done with helps the garbage collector know that you're finished with that object, so it's not altogether unlikely that Objective-C's garbage collector does the same thing. However, any benefit to nil'ing out instance variables once we get garbage collection in iOS would be marginal at best. I haven't been able to find anything authoritative that supports this claim, but even if it's 100% true, it seems likely that when the owning object is deallocated a few instructions later, the garbage collector will realize that the deallocated object is done with anything it was using.

If there is a benefit in this scenario, which seems unlikely, it seems like it would be such a tiny difference that it wouldn't even make sense to factor it into your decision. That being said, I have no firm evidence one way or the other on this issue and would welcome being enlightened.

After writing this, Michael Jurewitz pinged me to confirm that there's absolutely no benefit in terms of GC to releasing in dealloc, though it is otherwise a good idea to nil out pointers you're done with under GC.

Thanks to @borkware and @eridius for pointing out some issues

Update: Don't miss Daniel Jalkut's excellent response.

Tuesday, 21 September 2010

Complaining About Success

If you want to see how little sympathy you can get from those around you, try complaining about being successful. It just doesn't tend to be a problem over which people shed tears on your behalf. But I'm actually not here to complain. I'm really thrilled that MartianCraft is growing steadily and, more importantly, that we're getting interesting work.

I did think it was worth popping in here to explain my absence of late, however. I've got several partially written posts sitting in MarsEdit, including a couple for my recently-started and just-as-lonely personal blog. But time has become a scarce commodity for me, and will likely remain that way for at least the next month or two, possibly even longer. Even my OpenGL ES book for prags has suffered lately as I've been basically working double-time trying to catch up on my workload. Even the move to Florida is being affected, as we probably won't get our house on the market now until the new year.

The fact that I've been doing so much coding does mean that I've got some great ideas for blog posts, so hopefully once the dust settles, I'll have some goodies for you, but bear with me in the meantime.

Oh, and on a somewhat related note: a client of MartianCraft is looking for a really good mobile developer to work in New York City. They'll be happy with any mobile experience, with a preference for iPhone or Android experience; having both is ideal. This client is a very large media company, and you'd basically be responsible for leading one division's mobile efforts, starting with maintaining their existing apps, but eventually extending them and building new ones. It's a great opportunity for the right person. If you're in New York or willing to relocate there and have some good mobile chops, drop me an e-mail with your resume (my main e-mail is my twitter name at mac dot com, or you can send it to jeff at martiancraft dot com) and I'll put it in front of the hiring manager.


12.55
