Last week there was a bit of a Twitter in-fight in the iOS community over the "right" way to release your instance variables in `dealloc`. I think Rob actually started it, to be honest, but I probably shouldn't be bringing that up.

Basically, several developers were claiming that there's never a reason to set an instance variable to `nil` in `dealloc`, while others were arguing that you should always do so.

To me, there didn't seem to be a clear and compelling winner between the two approaches. I've used both in my career. However, since we're in the process of trying to decide which approach to use in the next edition of Beginning iPhone Development, I reached out to Apple's Developer Tools Evangelist, Michael Jurewitz, to see if there was an official or recommended approach to handling instance variables in `dealloc`.

Other than the fact that you should never, ever use mutators in `dealloc` (or `init`, for that matter), Apple does not have an official recommendation on the subject.

However, Michael and Matt Drance (of Bookhouse Software, and a former Evangelist himself) had discussed this issue extensively last week. They kindly shared their conclusions with me and said it was okay for me to turn them into a blog post. So, here it is. Hopefully, I've captured everything correctly.

The Two Major Approaches

Just to make sure we're all on the same page, let's look at the two approaches that made up the two different sides of the argument last week.

Just Release

The more traditional approach is to simply release your instance variables and leave them pointing to the released (and potentially deallocated) object, like so:

```objc
- (void)dealloc
{
    // Release each instance variable, leaving the pointer dangling
    [sneezy release];
    [sleepy release];
    [dopey release];
    [doc release];
    [happy release];
    [bashful release];
    [grumpy release];
    [super dealloc];
}
```

In this approach, each of the pointers will point to a potentially invalid object for a very short period of time, until the method returns, at which point the instance variable disappears along with its owning object. In a single-threaded application, there's very little chance that the instance variables will be used before they go away: the pointer would have to be accessed by something triggered by code in either this object's implementation of `dealloc` or the `dealloc` of one of its superclasses. That is probably what has led several people to proclaim that there's "no value in setting instance variables to `nil`" in `dealloc`.

In a multi-threaded environment, however, there is a very real possibility that the pointer will be accessed between the time the object it points to is released and the time its owning object finishes being deallocated. Generally speaking, this is only going to happen if you've got a bug elsewhere in your code, but let's face it, you very well may. Anyone who codes on the assumption that all of their code is perfect is begging for a smackdown, and Xcode's just biding its time waiting for the opportunity.

Release and nil

In the last few years, another approach to `dealloc` has become more common. In this approach, you release each instance variable and then immediately set it to `nil` before releasing the next one. It's common to put the `release` and the assignment to `nil` on the same line, separated by a comma (C's comma operator) rather than on consecutive lines separated by semicolons, though that's purely stylistic and has no effect on the way the code is compiled. Here's what our previous `dealloc` method might look like using this approach:

```objc
- (void)dealloc
{
    // Release each instance variable, then nil the pointer immediately
    [sneezy release], sneezy = nil;
    [sleepy release], sleepy = nil;
    [dopey release], dopey = nil;
    [doc release], doc = nil;
    [happy release], happy = nil;
    [bashful release], bashful = nil;
    [grumpy release], grumpy = nil;
    [super dealloc];
}
```

In this case, if some piece of code accesses a pointer between the time `dealloc` begins and the time the object is actually deallocated, it will almost certainly fail gracefully, because sending a message to `nil` is perfectly legal in Objective-C and simply does nothing. However, you're doing a tiny bit of extra work by assigning `nil` to a bunch of pointers that are going to go away momentarily, and you're creating a little extra typing for yourself in every class.
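Plain C has a close analog of the "messages to `nil` are harmless" rule: the C standard defines `free(NULL)` as doing nothing. The minimal sketch below is plain C (so it also compiles as Objective-C) and mimics the release-and-nil pattern under a loud assumption: `free` stands in for `-release` on objects nobody else retains, and the `Cottage` struct and `cottage_teardown` function are hypothetical names, not part of any API.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical owner; each field plays the role of an instance variable
   that this object alone owns. */
typedef struct Cottage {
    char *sneezy;
    char *grumpy;
} Cottage;

/* Release-and-nil teardown. Each pointer is cleared right after it is
   freed, so running this a second time (a stand-in for some other code
   touching the fields mid-teardown) only calls free(NULL), which the C
   standard defines as a no-op. */
static void cottage_teardown(Cottage *c) {
    assert(c != NULL);
    free(c->sneezy), c->sneezy = NULL;
    free(c->grumpy), c->grumpy = NULL;
}
```

With the "just release" version (no `= NULL` assignments), the second call would hand already-freed pointers back to `free`, which is undefined behavior: the C counterpart of the `EXC_BAD_ACCESS` crashes and heisenbugs discussed under The Showdown.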

The Showdown

So, here's the real truth of the matter: The vast majority of the time, it's not going to make any noticeable difference whatsoever. If you're not accessing instance variables that have been released, there's simply not going to be any difference in the behavior between the two approaches. If you are, however, then the question is: what do you want to happen when your code does that bad thing?

In the first approach, your application will usually crash with an `EXC_BAD_ACCESS`, though you could also end up with any manner of odd behavior (which we call a "heisenbug") if the released object is deallocated and its memory is then reused for another object owned by your application. In those cases, you may get an "unrecognized selector" exception when the message is sent to the deallocated object, or you may simply get unexpected behavior from a method with the same name being called on the wrong object.

In the other approach, your application will quietly send a message to `nil` and go about its merry way, generally without a crash or any other immediately identifiable problem.

The former approach is actually good when you're developing, debugging, and doing unit testing, because it makes it easier to find your problematic code. On the other hand, that approach is really, really bad in a shipping application because you really don't want to crash on your users if you can avoid it.

The latter approach, conversely, can hide bugs during development, but handles those bugs more gracefully when they happen, and you're far less likely to have your application go up in a big ball of fire in front of your users.

The Winner?

There really isn't a clear-cut winner, which is probably why Apple doesn't have an official recommendation or stance. During their discussion, Matt and Michael came up with a "best of both worlds" solution, but it requires a fair bit of extra code over either of the common approaches.

If you want your application to crash when a released pointer is accessed during development and debugging, the solution is to use the traditional approach in your debug configuration. If you want your application to degrade gracefully for your users, the solution is to use the newer approach in your release and ad hoc configurations.

One somewhat pedantic implementation of this approach would be this:

```objc
- (void)dealloc
{
#if DEBUG
    // Debug builds: plain release, so touching a dangling pointer crashes loudly
    [sneezy release];
    [sleepy release];
    [dopey release];
    [doc release];
    [happy release];
    [bashful release];
    [grumpy release];
    [super dealloc];
#else
    // Release and ad hoc builds: release and nil, so a stray access just messages nil
    [sneezy release], sneezy = nil;
    [sleepy release], sleepy = nil;
    [dopey release], dopey = nil;
    [doc release], doc = nil;
    [happy release], happy = nil;
    [bashful release], bashful = nil;
    [grumpy release], grumpy = nil;
    [super dealloc];
#endif
}
```

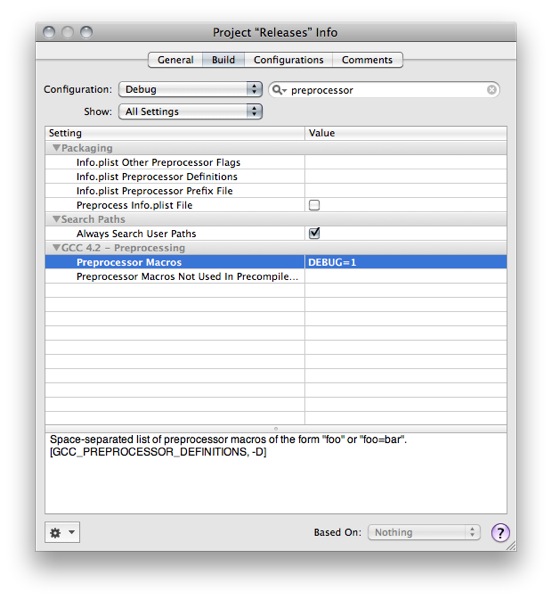

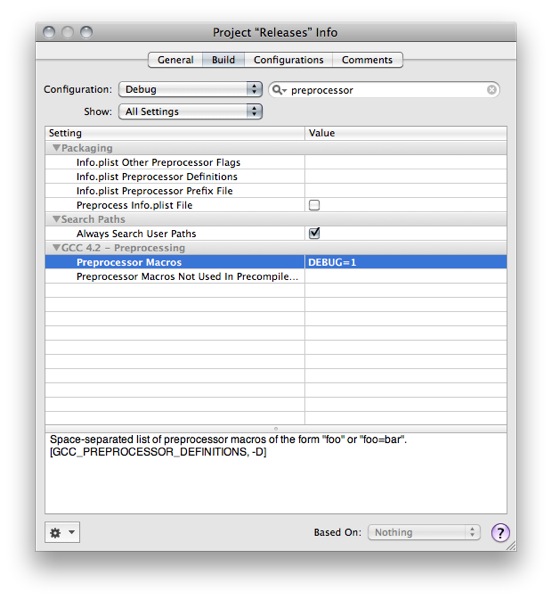

That code assumes that your debug configuration has a precompiler definition of

DEBUG, which you usually have to add to your Xcode project - most Xcode project templates do not provide it for you. There are several ways you can add it, but I typically just use the

Preprocessor Macros setting in the project's Build Configuration:

Although the code above does, indeed, give us the best of both worlds (a crash during development and debugging, and graceful degradation for customers), it at least doubles the amount of code we have to write in every class. We can do better than that, though. How about a little macro magic? If we add the following macro to our project's .pch file:

```objc
// Debug builds: plain release. All other builds: release, then nil the pointer.
#if DEBUG
#define MCRelease(x) [x release]
#else
#define MCRelease(x) [x release], x = nil
#endif
```

We can then use that macro in `dealloc`, and our best-of-both-worlds code becomes much shorter and more readable:

```objc
- (void)dealloc
{
    MCRelease(sneezy);
    MCRelease(sleepy);
    MCRelease(dopey);
    MCRelease(doc);
    MCRelease(happy);
    MCRelease(bashful);
    MCRelease(grumpy);
    [super dealloc];
}
```

Once you've got the macro in your project, this option is actually no more work or typing than either of the other `dealloc` methods.
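Because Objective-C is a superset of C, the macro trick itself is ordinary preprocessor work, and a plain C analog makes it easy to check what each expansion does outside an Xcode project. In this minimal sketch, `MCFree`, `Cottage`, `cottage_teardown`, and `release_clears_pointers` are hypothetical names, and `free` stands in for `-release` (like a message to `nil`, it is harmless on NULL). One preprocessor detail worth knowing: `#if DEBUG` treats an undefined `DEBUG` as 0, so a build that never defines it quietly gets the release-and-nil branch rather than an error.

```c
#include <assert.h>
#include <stdlib.h>

/* Hypothetical C analog of MCRelease, with free() standing in for
   -release. As with the original, the argument is evaluated twice and
   must be assignable, so pass a plain variable or struct field. */
#if DEBUG
#define MCFree(p) free(p)
#else
#define MCFree(p) (free(p), (p) = NULL)
#endif

/* Hypothetical owner whose fields play the role of instance variables. */
typedef struct Cottage {
    char *sneezy;
    char *grumpy;
} Cottage;

/* Analog of a dealloc written with the macro: one line per field. */
static void cottage_teardown(Cottage *c) {
    assert(c != NULL);
    MCFree(c->sneezy);
    MCFree(c->grumpy);
}

/* Reports which expansion this build selected, which is a quick way to
   confirm that the DEBUG definition actually reached the compiler. */
static int release_clears_pointers(void) {
#if DEBUG
    return 0;
#else
    return 1;
#endif
}
```

Wrapping the release-build expansion in parentheses keeps it a single expression, so it behaves sensibly even in contexts like the body of an unbraced `if`.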

But, you know what? If you want to keep doing it the way you've always done it, it's really fine, regardless of which way you do it. If you're consistent in your use and are aware of the tradeoffs, there's really no compelling reason to use one over the other outside of personal preference.

So, in other words, it's kind of a silly thing for us all to argue over, especially when there's already politics, religions, and sports to fill that role.

The Garbage Collection Angle

There's one last point I want to address. I've heard a few times from different people that setting an instance variable to `nil` in `dealloc` acts as a hint to the garbage collector when you're using the allowed-not-required GC option (when the required option is being used, `dealloc` isn't even called; `finalize` is). If this were true, forward compatibility would be another possible argument for preferring the newer approach to `dealloc` over the traditional approach.

It is true that in Java and some other garbage-collected languages, nulling out a pointer that you're done with helps the garbage collector know that you're done with that object, so it's not altogether unlikely that Objective-C's garbage collector behaves the same way. However, any benefit to `nil`'ing out instance variables in `dealloc`, once we get garbage collection in iOS, would be marginal at best. I haven't been able to find anything authoritative that supports this claim, but even if it's 100% true, it seems likely that when the owning object is deallocated a few instructions later, the garbage collector will realize that the deallocated object is done with anything it was using.

If there is a benefit in this scenario, which seems unlikely, it seems like it would be such a tiny difference that it wouldn't even make sense to factor it into your decision. That being said, I have no firm evidence one way or the other on this issue and would welcome being enlightened.

After I wrote this, Michael Jurewitz pinged me to confirm that there's absolutely no benefit in terms of GC to releasing in `dealloc`, though it is otherwise a good idea to `nil` pointers you're done with under GC.

Thanks to @borkware and @eridius for pointing out some issues.

Update: Don't miss Daniel Jalkut's excellent response.
